Hadoop is hard. If you’ve ever tried to code in MapReduce or manage a Hadoop cluster running production jobs, you know what I mean. Apache Spark has promise and simplifies some of Hadoop’s complexities, but it also brings its own challenges.

The good news is that, regardless of technology, there are more and more successful Big Data implementations: organizations of various sizes and industries are in production with Hadoop and Spark applications. We are in a somewhat unique position. Not only do we have years of Hadoop technology experience as the team behind Cascading, but we also see how our 10,000+ customers are building, managing, monitoring and operationalizing their environments, and the challenges they have faced.

Now we get to share the journey many of them took, and are still taking, to achieve operational readiness on Hadoop and meet business demands for service quality. In this blog series, we will share the 6 “a-ha moments” we see almost every organization go through on the way to production readiness on Hadoop. With any luck, sharing them will help you avoid some common operational pitfalls.

Discovery #1: We Have a Significant Visibility Problem

Yes, there are lots of top-notch cluster management and monitoring solutions out there. With them you can look into performance metrics for the cluster such as CPU utilization, disk performance and I/O. For examining these cluster-level metrics, there is no question about the value they provide.

But what about application execution performance? Do these tools provide that kind of insight?

Framework and cluster monitoring solutions don’t address the overwhelming lack of visibility into how Hadoop applications perform once they are placed into production. Specifically, they fail to surface, or even measure, the information needed to troubleshoot application performance. Without the right visibility, teams are left to accept that their applications may not perform as expected in production, and they probably won’t know until it’s too late.

Discovery #2: Organizing Correctly Ensures Production Readiness

The nature of Hadoop means it often starts its life as a series of science experiments. Once those experiments prove successful, either by lowering costs or by opening up new revenue-generating opportunities, they are moved to production. Simple, right? Well, not always. If you have a handful of applications, maybe it is this simple, but what we hear is that quality-of-service problems arise quickly in production if roles and responsibilities are left to chance. Does the development team just manage everything? Should we set up a special operations team to manage the cluster, or should IT operations do it? Who owns the applications if the cluster is managed centrally? These questions came up whether it was a handful of teams running hundreds or thousands of jobs per day or just a handful of business-critical jobs.

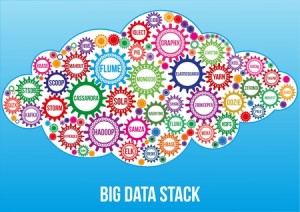

Discovery #3: Our Success Means Our Environment Is Increasingly Chaotic and Unreliable

The proliferation of fit-for-purpose technologies means most environments will be a heterogeneous hotbed of different technologies. Almost everyone we speak to has some combination of MapReduce, Hive, Cascading, Scalding, Pig and Spark. They use Oozie, Control-M, cron, etc. for scheduling. They want to move some applications to Apache Tez, Apache Flink and, of course, Apache Spark. The list goes on. It seems every week a new project is announced, and there is no indication this is slowing down any time soon.

Different technologies inherently mean each one has its own set of monitoring tools. In a homogeneous environment, that’s great. In a heterogeneous technology environment, it sucks. It just adds to the confusion and chaos about what is actually happening to the performance of your applications.

Some applications could be behaving differently in production than expected, scheduled jobs could be processing more data than usual, or maybe someone has just submitted a massive ad-hoc query. Because you can’t see what is happening or who is causing it, it can be extremely difficult to maintain reliable, predictable performance, and slowdowns become more frequent.

Discovery #4: Wow! It Takes a Really Long Time to Troubleshoot a Problem

The truth is that no app will maintain perfect performance over its lifetime. Unfortunately, troubleshooting in the Hadoop environment with current methods can be a painstaking, time-intensive task.

Think about the amount of time it can take teams to sift through the job tracker and log files. Hours of mind-numbing investigation just to find what could be a single line of code, or countless lines. These needles in the haystack can be found, but there is no getting around how much time the search can eat up.

Discovery #5: We Are Not Properly Aligned to Business Priorities

Things will eventually go wrong. The important thing when they do is to understand the downstream impact across the organization and adapt. Right now, businesses are failing in this regard and have a poor understanding of these impacts.

Submitting an application to run means it’s then broken up into small jobs and tasks so those units of work can be distributed across the cluster for processing.

From a performance perspective, this is fantastic. From a monitoring perspective? Not so great.

Adding to the list of shortcomings associated with other tools, today’s monitoring solutions fail to provide critical insight into where your jobs or tasks went wrong. So while these monitoring tools will most certainly say which jobs failed, teams are left staring at a daunting pile of job failures. Without knowing where in the application the failure occurred, all they can do is once again sift through log files to try to find the culprit.

Depending on where the failure occurred, all subsequent jobs will also fail. This blindness means that teams won’t be able to identify who owns that application, what service level agreement will be missed or what data-centric business process won’t be carried out.
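To make the downstream-impact idea concrete, here is a minimal sketch in Python. It assumes you already have a map of which jobs consume each job's output, plus an owner for each job. The job names, the `DOWNSTREAM` and `OWNERS` tables, and the `affected_jobs` helper are all hypothetical and do not come from any particular scheduler or monitoring product.

```python
from collections import deque

# Hypothetical job graph: each job maps to the jobs that consume its output.
DOWNSTREAM = {
    "ingest": ["clean"],
    "clean": ["aggregate", "export"],
    "aggregate": ["report"],
    "export": [],
    "report": [],
}

# Hypothetical ownership metadata, keyed by job name.
OWNERS = {
    "ingest": "data-platform",
    "clean": "data-platform",
    "aggregate": "analytics",
    "export": "partner-integrations",
    "report": "finance",
}


def affected_jobs(failed, downstream):
    """Return every job that transitively depends on `failed`, in breadth-first order."""
    seen = []
    queue = deque(downstream.get(failed, []))
    while queue:
        job = queue.popleft()
        if job in seen:
            continue  # already reached through another path
        seen.append(job)
        queue.extend(downstream.get(job, []))
    return seen


def impacted_owners(failed, downstream, owners):
    """Return the sorted set of teams whose jobs sit downstream of the failure."""
    return sorted({owners[job] for job in affected_jobs(failed, downstream)})
```

With this in place, `affected_jobs("clean", DOWNSTREAM)` lists the jobs that cannot run, and `impacted_owners("clean", DOWNSTREAM, OWNERS)` names the teams to notify. The hard part in practice is keeping the dependency and ownership data current, which this sketch simply assumes.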

Teams are left wondering things like, “What happened to our data set?”

Pondering such mysteries will, over time, undermine the team’s confidence, increasing hesitation about adoption and delaying production readiness.

Discovery #6: Reporting Is Even Harder Than Troubleshooting

Many teams relegate data lineage and audit reporting to a backend reporting task to be thought about later. This causes headaches down the road, because the information you need for audit reporting, data lineage tracking and regulatory compliance is spread across logs and systems. It’s up to you to piece everything back together in a way that will meet requirements.

Ideally, you set up the mechanisms to capture and store the required application metadata and performance metrics on the front end, as applications run. This turns data lineage and audit reporting into a simple two-click reporting task on the back end. The challenge, once again, is getting the right level of visibility to capture the right metadata, as existing tools are of little value here.
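As a rough illustration of capturing metadata up front, the sketch below wraps an application run in a Python context manager that records who ran what, against which inputs and outputs, and how it ended. The `record_run` helper, its field names and the in-memory `sink` list are assumptions made for illustration; a real deployment would write to a durable store and pull metrics from the framework itself.

```python
import json
import time
from contextlib import contextmanager


@contextmanager
def record_run(app, owner, inputs, outputs, sink):
    """Record one run of `app` as a JSON line appended to `sink` (hypothetical store)."""
    record = {
        "app": app,
        "owner": owner,
        "inputs": list(inputs),    # datasets read: the lineage sources
        "outputs": list(outputs),  # datasets written: the lineage targets
        "started": time.time(),
        "status": "running",
    }
    try:
        yield record  # the caller may attach extra fields while running
        record["status"] = "succeeded"
    except Exception as exc:
        record["status"] = "failed"
        record["error"] = repr(exc)
        raise  # never hide the failure from the caller
    finally:
        record["finished"] = time.time()
        sink.append(json.dumps(record))


# Usage: wrap the launch of a job; the body here is a stand-in for real work.
audit_log = []
with record_run("daily-sales", "analytics", ["raw/sales"], ["agg/sales"], audit_log) as run:
    run["rows_processed"] = 1000  # illustrative metric, not measured from a real job
```

Because each record already names its inputs, outputs and owner, a lineage or audit report becomes a query over these records rather than a reconstruction from scattered logs.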

Over the next several posts, we will go into details about each of these discoveries and what leading organizations are doing to address these operational challenges.
